X-ray computed tomography (CT) and laminography are unique tools for the systematic examination of materials and biological samples in various research areas. CT employs the penetrating nature of X-rays to generate attenuation maps of the imaged object. In conventional CT systems, the object is placed on a rotating sample stage between a source emitting a beam of X-rays and a detector measuring the two-dimensional radiographic intensity image of the incident X-rays. As the object rotates with respect to the source-detector assembly (the gantry), radiographs are acquired at a set of typically equally spaced rotation positions over a full 360° revolution. A tomographic reconstruction algorithm is then applied to the set of acquired radiographs to generate a volumetric X-ray attenuation map of the scanned object. The attenuation map consists of a three-dimensional array of voxels, each assigned a gray value corresponding to the calculated X-ray attenuation for the volume occupied by the voxel.

Laminography is a special kind of X-ray tomography used for flat samples. When a flat sample such as a printed circuit board is examined in a CT system, the X-ray absorption in the sample increases drastically whenever the long edge of the sample is nearly parallel to the direction of the X-rays. In such cases it is advantageous to tilt the sample, so that the normal to its large plane is inclined with respect to the incident beam, and then to rotate the sample around this normal.
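The absorption argument for flat samples can be made concrete with a small calculation. The following is a minimal sketch, assuming a homogeneous plate with a rectangular cross-section and the Beer-Lambert law; the plate dimensions and the attenuation coefficient are hypothetical values chosen only for illustration.

```python
import numpy as np

def path_length(thickness, width, angle_deg):
    """Chord length of a ray through the centre of a rectangular cross-section.

    angle_deg is the angle between the beam and the plate normal:
    0 deg means the beam crosses the plate face-on, 90 deg means it
    travels along the long edge.
    """
    a = np.deg2rad(angle_deg)
    c, s = abs(np.cos(a)), abs(np.sin(a))
    through_faces = thickness / c if c > 1e-12 else np.inf
    through_edges = width / s if s > 1e-12 else np.inf
    return min(through_faces, through_edges)

def transmission(mu, length):
    """Beer-Lambert transmission for attenuation coefficient mu (1/mm)."""
    return float(np.exp(-mu * length))

# Hypothetical plate: 1.6 mm thick, 100 mm wide, mu = 0.05 / mm.
THICKNESS, WIDTH, MU = 1.6, 100.0, 0.05

for angle in (0, 60, 85, 90):
    length = path_length(THICKNESS, WIDTH, angle)
    print(f"{angle:3d} deg: path {length:7.2f} mm, "
          f"transmission {transmission(MU, length):.4f}")
```

In a conventional CT scan of such a plate the angle sweeps through 90°, where almost no photons reach the detector. In laminography the angle between beam and plate normal stays constant (for example 60° in this sketch), so the path length stays short for every projection.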

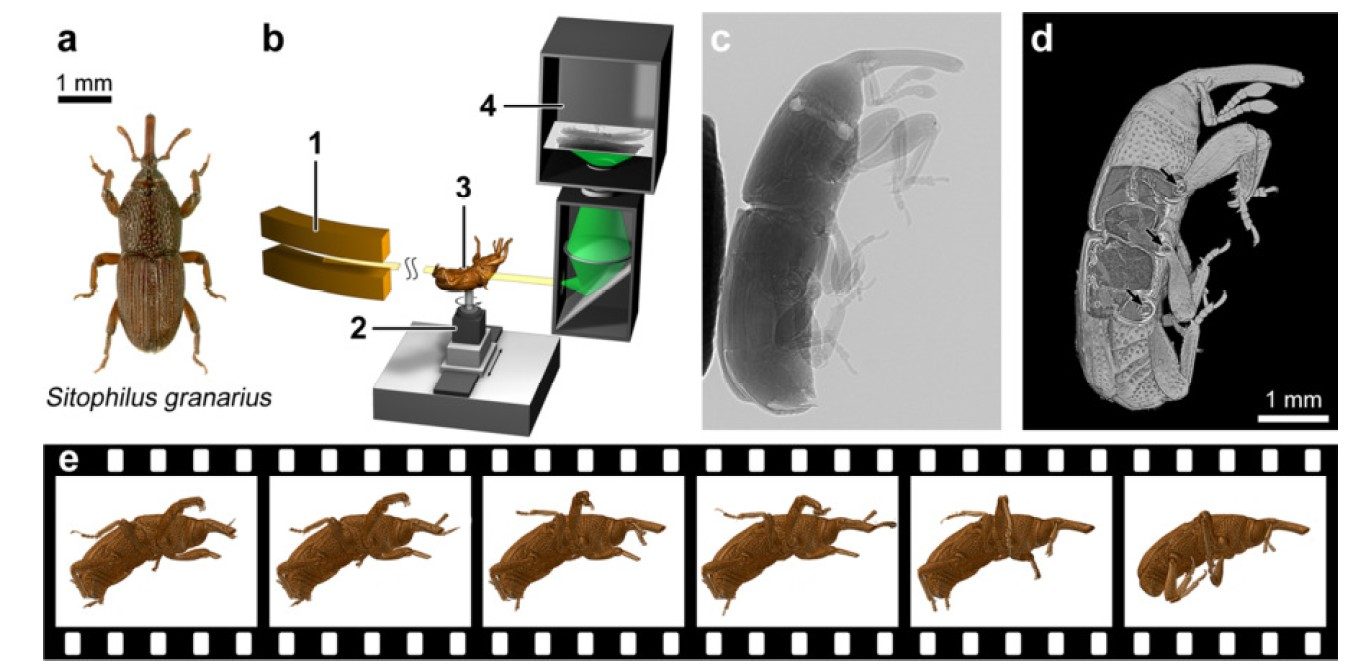

Recent progress in X-ray flux density at synchrotron facilities, combined with fast pixel detectors, enables scan times of less than a second. Together with optical flow analysis, this has opened new approaches for 4D (space + time) in situ, operando and even in vivo studies, e.g., of the structural evolution of materials and devices during technological processes, or of biological processes in cells, tissues and whole small organisms.

Due to the complexity of the experimental setup, automation and software-controlled experiments with X-ray tomography are challenging. For a successful automated scan, the imaging and sample apparatus must be aligned and positioned properly, the sample must be stabilized, and the measurement setup must be controlled during the imaging process. Moreover, intelligent control of the imaging process with respect to the unpredictable spatio-temporal localization of the region of interest (ROI) and its evolution is particularly difficult to achieve because it requires information about the process under study. In the worst case, the ROI may be missed completely or leave the field of view (FOV) during the experiment. This problem is especially severe if the position of the sample in 4D space is not known a priori or changes during the scan. Although two-dimensional (2D) radiographs already contain sufficient information for fast online feedback in many applications, in certain cases control decisions must be based on quality metrics that can only be derived from 3D or 4D image reconstruction.
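As an illustration of feedback derived from a single 2D radiograph, the sketch below estimates how far an absorbing feature has drifted from the centre of the field of view. It is a simplified example under stated assumptions, not the method used in the control system: the function name `roi_offset`, the threshold, and the use of an absorption-weighted centroid are all hypothetical choices.

```python
import numpy as np

def roi_offset(radiograph, flat, threshold=0.1):
    """Offset (rows, cols) of the absorbing region from the FOV centre.

    radiograph: measured intensity image
    flat:       flat-field image taken without the sample
    Returns None if nothing absorbs more than the threshold.
    """
    # Convert intensities to absorption (line integrals of attenuation).
    absorption = -np.log(np.clip(radiograph / flat, 1e-6, None))
    weights = np.where(absorption > threshold, absorption, 0.0)
    total = weights.sum()
    if total == 0.0:
        return None
    rows, cols = np.indices(absorption.shape)
    centroid_row = (rows * weights).sum() / total
    centroid_col = (cols * weights).sum() / total
    centre_row = (absorption.shape[0] - 1) / 2.0
    centre_col = (absorption.shape[1] - 1) / 2.0
    return centroid_row - centre_row, centroid_col - centre_col

# Synthetic 64 x 64 radiograph with one absorbing block off-centre.
flat = np.ones((64, 64))
image = np.ones((64, 64))
image[10:20, 40:50] = np.exp(-1.0)
print(roi_offset(image, flat))
```

A control script could translate such an offset into a stage correction before the feature leaves the field of view. Decisions that depend on 3D structure would still require a reconstruction.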

Furthermore, the resolution and duration of in vivo experiments with living objects are currently limited by radiation damage. The radiation dose may be reduced by taking fewer projections to reconstruct a single tomogram or by reducing the exposure time for each projection. With traditional reconstruction techniques, both approaches lead to various artifacts in the final images. Motion of the objects during the experiment also affects the reconstruction quality. Compressed sensing theory has demonstrated that signals can be recovered from undersampled data. Incorporating a sparsity prior can compensate for the incompleteness of the measurements, so that images can be reconstructed from fewer measurements using a non-linear optimization scheme. However, such optimization techniques are computationally very demanding.
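To give a flavour of such a sparsity-regularized optimization, the sketch below applies the iterative shrinkage-thresholding algorithm (ISTA) to a small underdetermined linear system. This is a generic textbook technique chosen for illustration, not the reconstruction method used in the platform; the random measurement matrix, the problem size and the regularization weight are assumptions.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of the L1 norm: shrink every entry towards zero."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ista(A, b, lam, n_iter=500):
    """Minimise 0.5 * ||A x - b||^2 + lam * ||x||_1 with ISTA.

    Returns the final iterate and the objective value after each iteration.
    """
    step = 1.0 / np.linalg.norm(A, 2) ** 2  # 1 / Lipschitz constant
    x = np.zeros(A.shape[1])
    history = []
    for _ in range(n_iter):
        gradient = A.T @ (A @ x - b)
        x = soft_threshold(x - step * gradient, step * lam)
        history.append(0.5 * np.sum((A @ x - b) ** 2) + lam * np.sum(np.abs(x)))
    return x, history

# Hypothetical test problem: 40 measurements of a 100-element signal
# that has only 4 non-zero entries.
rng = np.random.default_rng(0)
A = rng.standard_normal((40, 100)) / np.sqrt(40)
x_true = np.zeros(100)
x_true[[5, 27, 63, 90]] = [1.0, -1.0, 0.5, 2.0]
b = A @ x_true

x_rec, objective = ista(A, b, lam=0.01)
print("relative error:", np.linalg.norm(x_rec - x_true) / np.linalg.norm(x_true))
```

Every iteration costs two matrix-vector products, and many iterations are needed. For tomography the matrix corresponds to forward and back projection of a full volume, which is why such schemes are so much more expensive than a single analytic reconstruction.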

In collaboration with the KIT Imaging Cluster, we are developing a new-generation control system that enables a new type of feedback-driven experiment and allows fast processes to be tracked with high spatial and temporal resolution. The control system is currently operated at two imaging beamlines of the KIT Imaging Cluster. While focusing on synchrotron tomography and laminography, our technologies can be easily adapted to different types of detectors generating image streams with a bandwidth of up to 8 GB/s, and they enable online monitoring, real-time data processing, and data-driven control. The software platform is open source and all software components are freely available: https://github.com/ufo-kit.

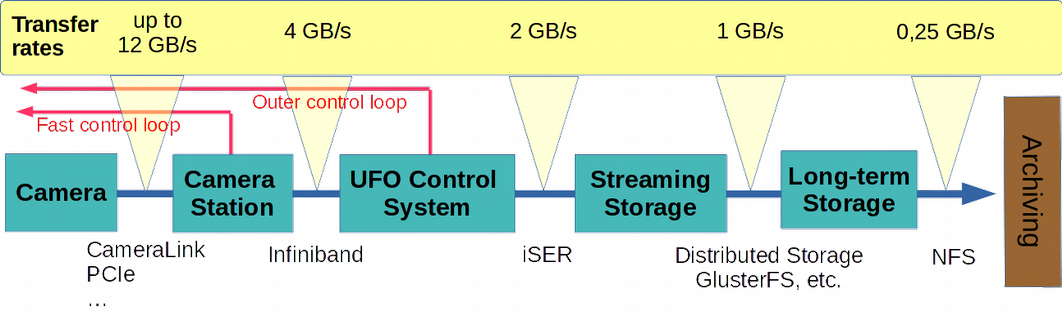

Our UFO (Ultra-Fast tOmography) platform covers the complete data path from the X-ray detector to the long-term data archive. The system includes hardware and software components and proposes a design guideline for the computing infrastructure. The primary design goals were low administrative effort, cost-efficient off-the-shelf components, and the ability to distribute equipment across the synchrotron facility. Important components of the framework are the networking, the camera framework, the computing environment and the control system.

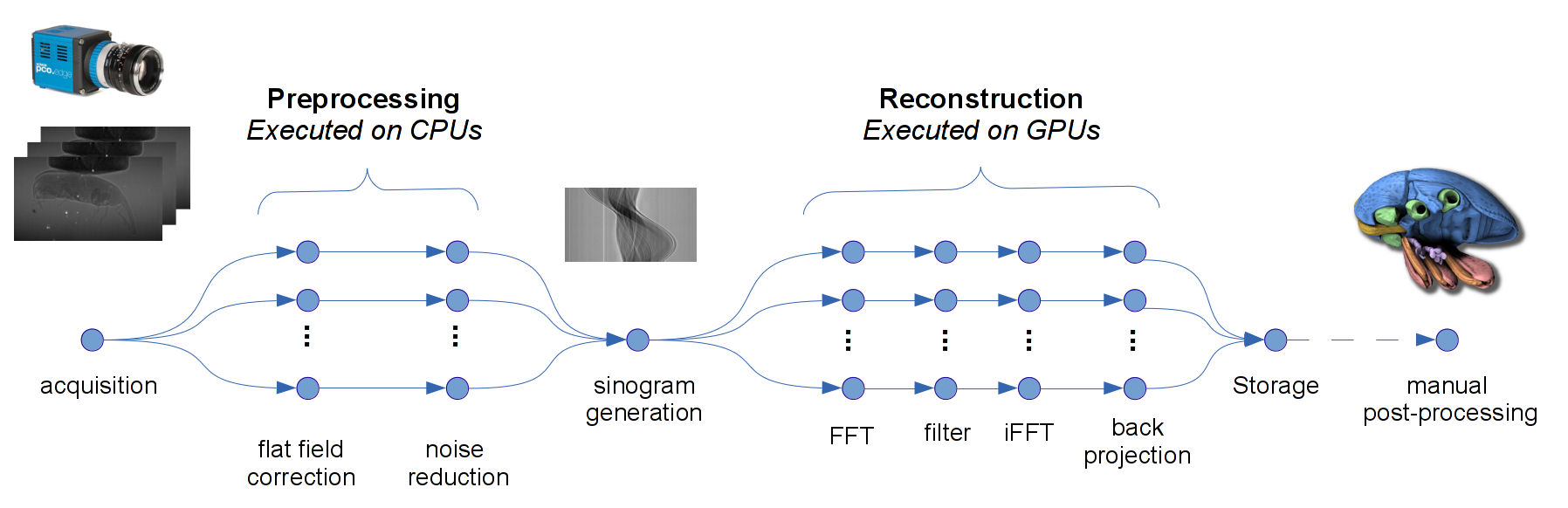

The data flow is organized as sketched in Fig. 2. First, the data is pre-processed using a single GPU directly at the camera station. At this stage, feedback based on 2D projections can be returned to the beamline instrumentation. Then, after online data reduction, the imaging data is passed to the distributed UFO control system for 3D reconstruction and data analysis. Information for a second feedback loop, based on the reconstructed 3D images, is extracted here. In addition, the raw data is streamed to the high-speed streaming storage.

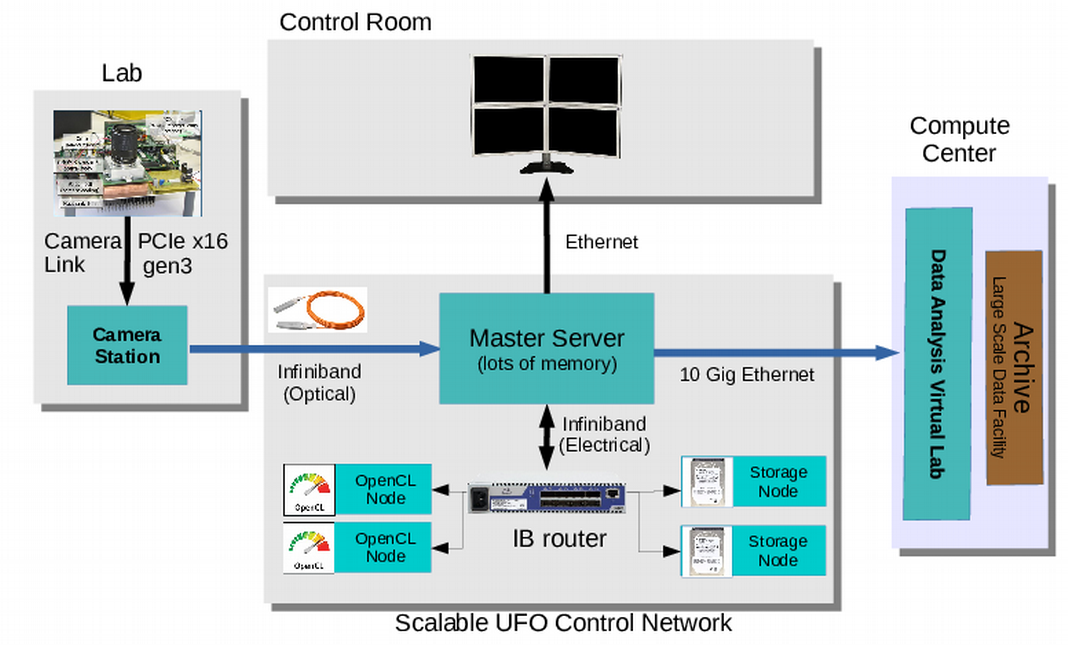

To build the data acquisition (DAQ) network we use four distinct types of nodes: camera stations, a master server, computational nodes, and storage nodes. The architecture is shown in Fig. 3. An Infiniband fabric connects all parts of the platform. Camera stations are located in the beamline hutch, while all other components are located outside and are connected to the camera station by an optical Infiniband link.

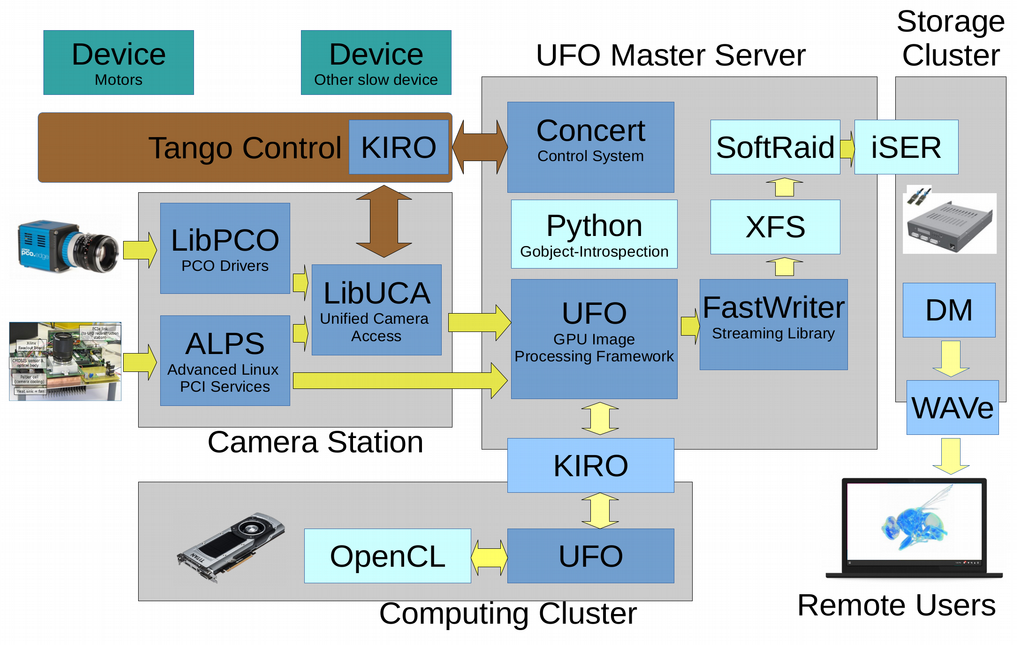

Multiple software components were developed to meet the requirements of the UFO control system. An overview of these components is given in Fig. 4. The central element of the system is the parallel UFO image processing framework. The Concert control system enables image-based control loops by integrating the UFO framework and fast beamline devices. The interface to the cameras is provided by the Unified Camera Access library (libuca). Besides commercial cameras, we have designed a range of in-house streaming cameras serviced by the Alps driver framework. To take full advantage of the high-speed Infiniband network, the KIT Infiniband Remote Communication library (KIRO) was developed. It supports the native Infiniband RDMA protocol. The library reaches data transfer rates of up to 4.7 GB/s and latencies on the order of 20 µs on an Infiniband FDR fabric.

The results of a successful experiment are first streamed to the high-speed storage partition using the FastWriter and then archived in the KIT Large Scale Data Facility (LSDF). To allow a quick preview of the archived 3D datasets, the web application Web Analysis of Volumes (WAVe) has been developed. Many recent mobile devices are equipped with powerful GPUs and are capable of visualizing 3D volumes in WAVe.

UFO image processing framework

The central element of the system is the pipelined UFO image processing framework. It models the image processing workflow as a structured graph in which each node represents an operator on the input data. By design, the UFO framework exploits multiple levels of parallelism: fine-grained massive parallelism, pipelining, and concurrent execution of branches within the graph. The library of image processing algorithms is implemented in OpenCL and carefully optimized for recent architectures from AMD and NVIDIA.
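The graph model can be illustrated with a few lines of plain Python. This is a conceptual sketch only: the class `TaskGraph` and its methods are hypothetical and do not reproduce the UFO API, and the branches are executed one after another rather than concurrently on GPUs.

```python
class TaskGraph:
    """Toy processing graph: nodes are operators, edges carry their output."""

    def __init__(self):
        self.operators = {}   # node name -> callable
        self.successors = {}  # node name -> list of downstream node names

    def add(self, name, operator):
        self.operators[name] = operator
        self.successors.setdefault(name, [])

    def connect(self, source, destination):
        self.successors[source].append(destination)

    def run(self, start, item):
        """Push one item through the graph and collect the sink outputs."""
        results = {}

        def visit(name, value):
            output = self.operators[name](value)
            if not self.successors[name]:
                results[name] = output  # sink node: keep the result
            for following in self.successors[name]:
                visit(following, output)

        visit(start, item)
        return results

# Hypothetical workflow: dark-current subtraction, clipping, then two
# independent branches that compute statistics on the corrected data.
graph = TaskGraph()
graph.add("subtract_dark", lambda pixels: [p - 1 for p in pixels])
graph.add("clip", lambda pixels: [max(p, 0) for p in pixels])
graph.add("sum", sum)
graph.add("max", max)
graph.connect("subtract_dark", "clip")
graph.connect("clip", "sum")
graph.connect("clip", "max")

print(graph.run("subtract_dark", [0, 3, 5]))
```

Because each node only depends on the output of its predecessor, the two branches after `clip` are independent of each other. That independence is what a real scheduler can exploit to run branches concurrently.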

The UFO scheduler is designed to support multi-GPU and clustered setups. Its input is a graph of tasks and a description of the available hardware, such as the number of GPUs, CPUs and remote compute nodes and how they can be accessed. The scheduler tries to find the best mapping of the tasks to the available hardware. In most cases, however, the hardware would be underutilized because there are far more compute units than tasks. To address this, the scheduler expands the task graph by examining its structure: each sequence of tasks that can potentially run on GPUs is duplicated and inserted into the task graph. At execution time, all tasks of one such sequence are mapped to the same GPU in order to reduce inter-GPU memory transfers.
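The expansion step can be sketched as follows. The example is deliberately simplified and makes several assumptions: the task graph is a linear chain, the names `Task`, `split_into_runs` and `expand` are hypothetical, and every GPU receives exactly one copy of each GPU sequence.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Task:
    name: str
    uses_gpu: bool

def split_into_runs(chain):
    """Group a linear chain into maximal runs of GPU or non-GPU tasks."""
    runs = []
    for task in chain:
        if runs and runs[-1][0].uses_gpu == task.uses_gpu:
            runs[-1].append(task)
        else:
            runs.append([task])
    return runs

def expand(chain, n_gpus):
    """Duplicate every GPU run once per GPU.

    Returns a list of stages. Each stage is a list of (tasks, gpu_index)
    pairs; gpu_index is None for runs that stay on the CPU. All tasks of
    one copy share a GPU, so intermediate data never crosses GPUs.
    """
    stages = []
    for run in split_into_runs(chain):
        if run[0].uses_gpu:
            stages.append([(run, gpu) for gpu in range(n_gpus)])
        else:
            stages.append([(run, None)])
    return stages

chain = [
    Task("read", uses_gpu=False),
    Task("filter", uses_gpu=True),
    Task("backproject", uses_gpu=True),
    Task("write", uses_gpu=False),
]

for stage in expand(chain, n_gpus=2):
    for tasks, gpu in stage:
        print([task.name for task in tasks], "-> GPU", gpu)
```

With two GPUs the `filter` and `backproject` pair appears twice, once per device, while `read` and `write` remain single tasks that feed and drain both copies.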

The same idea is employed to support clustering: sequences of long-running computations are identified and encapsulated, and a description is sent to the remote node. In the original graph, a placeholder node sends data to the remote compute node and receives the resulting data from it, thus re-injecting the data into the original graph. On each computation node, a UFO daemon is started and listens for requests from the main server. When the UFO framework wants to transmit data to a daemon for processing, it sends a request asking the daemon to prepare an empty buffer large enough to hold the data. As soon as the daemon has acknowledged this request, the data is collected and sent to the daemon, which stores it in the newly created buffer. From this point on, the computation on the daemon continues in the same way as in a normal, local UFO chain. Once the computation is done, the same exchange takes place in the opposite direction.
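The request, acknowledge, transfer and return sequence described above can be mimicked in a single process. The sketch below is an assumption-laden illustration: the classes `Endpoint` and `Daemon` and the function `remote_execute` are hypothetical, no network is involved, and the real daemon protocol may differ in its details.

```python
class Endpoint:
    """One side of the exchange; owns a receive buffer."""

    def __init__(self):
        self.buffer = None

    def request_buffer(self, size):
        """Allocate an empty buffer of the requested size and acknowledge."""
        self.buffer = bytearray(size)
        return True

    def receive(self, payload):
        """Store the payload in the previously prepared buffer."""
        if self.buffer is None or len(self.buffer) != len(payload):
            raise ValueError("no matching buffer was prepared")
        self.buffer[:] = payload

class Daemon(Endpoint):
    """Remote side: runs a chain of operators on the received data."""

    def __init__(self, chain):
        super().__init__()
        self.chain = chain

    def compute(self):
        data = bytes(self.buffer)
        for operator in self.chain:
            data = operator(data)
        return data

def remote_execute(master, daemon, payload):
    """Send payload to the daemon, process it there, and return the result."""
    if not daemon.request_buffer(len(payload)):   # 1. ask for a buffer
        raise RuntimeError("daemon refused the request")
    daemon.receive(payload)                       # 2. transfer the data
    result = daemon.compute()                     # 3. remote computation
    if not master.request_buffer(len(result)):    # 4. same steps, reversed
        raise RuntimeError("master refused the request")
    master.receive(result)
    return bytes(master.buffer)

invert = lambda data: bytes(255 - value for value in data)
print(remote_execute(Endpoint(), Daemon([invert]), bytes([0, 1, 2])))
```

Preparing the buffer before the transfer means the receiver never has to guess the size of incoming data, which suits transports such as RDMA where the target memory must exist before data is written into it.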

"Concert" control system

The high-level control system “Concert” is used to manage beamline devices and control the course of the experiment. It is based on the UFO framework and enables image-based control loops by integrating high-speed compute nodes and fast beamline devices. The control loops are implemented as easy-to-write Python scripts using components provided by “Concert” and the UFO framework. “Concert” defines interfaces for many standard components used at imaging beamlines, including high-speed detectors, rotational and linear motors, sample changers and beam monochromators. To realize a common access scheme for different high-speed cameras, the Unified Camera Access library (libuca) is provided. libuca works with local and remote cameras and achieves transfer rates of 4750 frames per second when an 8-bit 1-megapixel camera and FDR Infiniband interconnects are used. A Tango wrapper is used to communicate with standard devices. Easy-to-use components are provided for Aerotech and CompactRIO-based motors and a variety of other devices. “Concert” greatly simplifies standard operations such as aligning the rotation axis, focusing the camera, and finding and centering the beam. Finally, “Concert” integrates with the data management system: metadata is automatically retrieved from the instruments, can be extended by the user, and is saved with the measured data.
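The quoted frame rate can be converted into a data rate with a short calculation. The sketch assumes that "1-megapixel" means exactly 10^6 pixels (here 1000 × 1000) and that 1 GB is 10^9 bytes; the function name is hypothetical.

```python
def stream_rate_gb_per_s(frames_per_second, width, height, bits_per_pixel):
    """Raw data rate of an uncompressed image stream in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_frame = width * height * bits_per_pixel / 8
    return frames_per_second * bytes_per_frame / 1e9

# 4750 frames per second from an 8-bit camera with 1000 x 1000 pixels.
rate = stream_rate_gb_per_s(4750, 1000, 1000, 8)
print(f"{rate:.2f} GB/s")
```

Under these assumptions the stream amounts to 4.75 GB/s, which is in line with the transfer rate of up to 4.7 GB/s stated for the KIRO library on an FDR fabric.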

Real-time Reconstruction

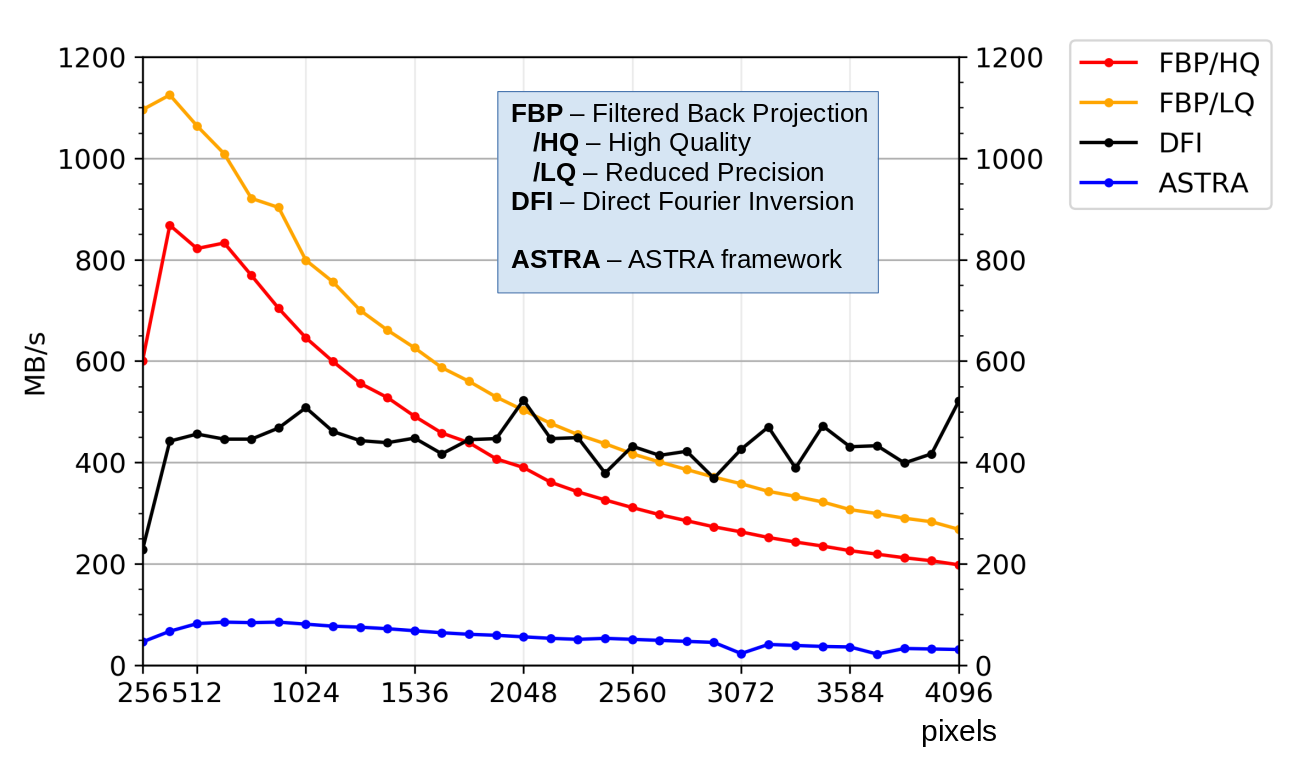

For real-time tomographic reconstruction, we provide two methods: Filtered Back Projection (FBP) and Direct Fourier Inversion (DFI). Compared to the most widely used FBP algorithm, DFI is more sensitive to noise but slightly faster. Both algorithms are adapted to and optimized for GPU hardware. Furthermore, we provide reduced-precision reconstruction modes. The resulting reduction in image quality is often acceptable for real-time applications: execution is faster, while the results are still sufficient to make reliable control decisions. As shown in Fig. 6, the provided software significantly outperforms the state-of-the-art algorithms in the ASTRA toolkit. The optimized algorithms achieve a throughput of 1 GB/s on a single GPU and up to 8 GB/s per GPU server. This exceeds the data rates of currently existing scientific camera interfaces. Besides real-time reconstruction, the developed algorithms can also be applied to accelerate otherwise slow low-dose reconstruction, which is normally performed offline. Further details about the developed algorithms are published in S. Chilingaryan et al., “Balancing load of GPU subsystems to accelerate image reconstruction in parallel beam tomography”, in Proc. of SBAC-PAD, Lyon, 2018. Available at https://ieeexplore.ieee.org/document/8645862
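For readers unfamiliar with FBP, the principle can be shown in a few lines of NumPy. This is a didactic CPU sketch for parallel-beam geometry with a plain ramp filter and linear interpolation; it is not the optimized OpenCL implementation described above, and the disc phantom and all function names are assumptions made for the example.

```python
import numpy as np

def fbp(sinogram, angles):
    """Filtered back projection of a parallel-beam sinogram.

    sinogram: array of shape (n_angles, n_detector_pixels)
    angles:   projection angles in radians, covering [0, pi)
    """
    n_angles, n_det = sinogram.shape
    # 1. Filter every projection with a ramp filter in Fourier space
    #    (zero-padded to limit wrap-around of the periodic convolution).
    n_pad = 2 * n_det
    ramp = np.abs(np.fft.fftfreq(n_pad))
    spectrum = np.fft.fft(sinogram, n=n_pad, axis=1) * ramp
    filtered = np.real(np.fft.ifft(spectrum, axis=1))[:, :n_det]
    # 2. Smear each filtered projection back across the image grid.
    det = np.arange(n_det) - (n_det - 1) / 2.0
    x, y = np.meshgrid(det, det)
    recon = np.zeros((n_det, n_det))
    for row, theta in zip(filtered, angles):
        s = x * np.cos(theta) + y * np.sin(theta)  # detector coordinate
        recon += np.interp(s, det, row)
    return recon * np.pi / n_angles

def disc_sinogram(n_det, n_angles, radius):
    """Analytic sinogram of a centred disc with unit attenuation."""
    det = np.arange(n_det) - (n_det - 1) / 2.0
    profile = 2.0 * np.sqrt(np.clip(radius**2 - det**2, 0.0, None))
    return np.tile(profile, (n_angles, 1))

angles = np.linspace(0.0, np.pi, 180, endpoint=False)
recon = fbp(disc_sinogram(128, 180, radius=20.0), angles)
print("inside disc:", recon[64, 64], "outside disc:", recon[64, 104])
```

The reconstructed value should be close to one inside the disc and close to zero outside it. Both steps are independent per projection and per pixel, which is why the algorithm maps well onto GPUs.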

Experiments and Projects

- UFO

- ROOF

- ASTOR / NOVA

For Students

We regularly offer a variety of student topics related to computed tomography. We expect students to be versed in image processing and to have good Python programming skills. Some topics also require a good understanding of GPU architectures and prior experience with parallel programming techniques. Please check the current vacancies or contact us if you have good programming skills, are able to work independently and are interested in high-performance computing and tomography. The range of topics is broad and can include the following tasks:

- General image processing: noise reduction, artifact compensation, etc.

- Development of advanced algorithms for tomographic reconstruction

- Parallelisation and optimization of image processing software

- Optical flow, image segmentation and visualization

Publications

Funkner, S.; Niehues, G.; Nasse, M. J.; Bründermann, E.; Caselle, M.; Kehrer, B.; Rota, L.; Schönfeldt, P.; Schuh, M.; Steffen, B.; Steinmann, J. L.; Weber, M.; Müller, A.-S.

2023. Scientific Reports, 13 (1), Art.-Nr.: 4618. doi:10.1038/s41598-023-31196-5

Faragó, T.; Gasilov, S.; Emslie, I.; Zuber, M.; Helfen, L.; Vogelgesang, M.; Baumbach, T.

2022. Journal of Synchrotron Radiation, 29 (3), 916–927. doi:10.1107/S160057752200282X

Ametova, E.; Burca, G.; Chilingaryan, S.; Fardell, G.; Jørgensen, J. S.; Papoutsellis, E.; Pasca, E.; Warr, R.; Turner, M.; Lionheart, W. R. B.; Withers, P. J.

2021. Journal of Physics D: Applied Physics, 54 (32), Art.-Nr.: 325502. doi:10.1088/1361-6463/ac02f9

Funkner, S.; Niehues, G.; Nasse, M.; Bründermann, E.; Caselle, M.; Kehrer, B.; Rota, L.; Schönfeldt, P.; Schuh, M.; Steffen, B.; Steinmann, J.; Weber, M.; Müller, A.-S.

2020, September 24. 8th MT ARD ST3 Meeting 2020, Karlsruhe, Deutschland (2020), Online, September 23–24, 2020

Raasch, J.; Thoma, P.; Scheuring, A.; Ilin, K.; Wünsch, S.; Siegel, M.; Smale, N.; Judin, V.; Hiller, N.; Müller, A.-S.; Semenov, A.; Hübers, H.-W.; Caselle, M.; Weber, M.; Hänisch, J.; Holzapfel, B.

2013, February 1. KSETA Inauguration Symposium (2013), Karlsruhe, Germany, February 1, 2013

Chilingaryan, S. A.

2020, May 12. Lunch and learn session at Photon Science Institute, University of Manchester (2020), Manchester, United Kingdom, May 12, 2020

Funkner, S.; Niehues, G.; Nasse, M. J.; Bründermann, E.; Caselle, M.; Kehrer, B.; Rota, L.; Schönfeldt, P.; Schuh, M.; Steffen, B.; Steinmann, J. L.; Weber, M.; Müller, A.-S.

2019

Chilingaryan, S.; Ametova, E.; Kopmann, A.; Mirone, A.

2019. Journal of real-time image processing, 17 (5), 1331–1373. doi:10.1007/s11554-019-00883-w

Chilingaryan, S. A.

2019. YerPhI Seminar (2019), Yerevan, Armenia, May 16, 2019

Chilingaryan, S.; Ametova, E.; Kopmann, A.; Mirone, A.

2019. 2018 30th International Symposium on Computer Architecture and High Performance Computing: SBAC-PAD 2018 ; Lyon, France, 24-27 September 2018 ; Proceedings, 158–166, Institute of Electrical and Electronics Engineers (IEEE). doi:10.1109/CAHPC.2018.8645862

Asadchikov, V.; Buzmakov, A.; Chukhovskii, F.; Dyachkova, I.; Zolotov, D.; Danilewsky, A.; Baumbach, T.; Bode, S.; Haaga, S.; Hänschke, D.; Kabukcuoglu, M.; Balzer, M.; Caselle, M.; Suvorov, E.

2018. Journal of applied crystallography, 51 (6). doi:10.1107/S160057671801419X

Ametova, E.; Ferrucci, M.; Chilingaryan, S.; Dewulf, W.

2018. Precision engineering, 54, 233–242. doi:10.1016/j.precisioneng.2018.05.016

Haas, D.; Mexner, W.; Spangenberg, T.; Cecilia, A.; Vagovic, P.; Kopmann, A.; Balzer, M.; Vogelgesang, M.; Pasic, H.; Chilingaryan, S.

2012. 9th International Workshop on Personal Computers and Particle Accelerator Controls (PCaPAC 2012), Kolkata, IND, December 4-7, 2012, 103–105, JACoW Publishing

Kopmann, A.; Chilingaryan, S.; Vogelgesang, M.; Dritschler, T.; Shkarin, A.; Shkarin, R.; Santos Rolo, T. dos; Farago, T.; Kamp, T. Van de; Balzer, M.; Caselle, M.; Weber, M.

2016. 2016 IEEE Nuclear Science Symposium, Medical Imaging Conference and Room-Temperature Semiconductor Detector Workshop (NSS/MIC/RTSD), Strasbourg, France, 29 October–6 November 2016, 1–5, Institute of Electrical and Electronics Engineers (IEEE). doi:10.1109/NSSMIC.2016.8069895

Ametova, E.; Ferrucci, M.; Chilingaryan, S.; Dewulf, W.

2018. Measurement science and technology, 29 (6), Art. Nr.: 065007. doi:10.1088/1361-6501/aab1a1

Jerome, N. T.; Ateyev, Z.; Lebedev, V.; Hopp, T.; Zapf, M.; Chilingaryan, S.; Kopmann, A.

2017. Proceedings of the International Workshop on Medical Ultrasound Tomography: 1.- 3. Nov. 2017, Speyer, Germany. Hrsg.: T. Hopp, 349–359, KIT Scientific Publishing. doi:10.5445/IR/1000080283

Jerome, N. T.; Chilingaryan, S.; Shkarin, A.; Kopmann, A.; Zapf, M.; Lizin, A.; Bergmann, T.

2017. IVAPP 2017 : 8th International Conference on Information Visualization Theory and Applications, Porto, Portugal, 27. Februar - 1. März.2017. Vol.: 3. Ed.: L. Linsen (IVAPP is part of VISIGRAPP, the 12th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications), 152–163, SciTePress. doi:10.5220/0006228101520163

Schmelzle, S.; Heethoff, M.; Heuveline, V.; Lösel, P.; Becker, J.; Beckmann, F.; Schluenzen, F.; Hammel, J. U.; Kopmann, A.; Mexner, W.; Vogelgesang, M.; Jerome, N. T.; Betz, O.; Beutel, R.; Wipfler, B.; Blanke, A.; Harzsch, S.; Hörnig, M.; Baumbach, T.; Kamp, T. Van de

2017. Developments in X-Ray Tomography XI, San Diego, CA, August 6-10, 2017. Ed.: B. Müller, 103910P, Society of Photo-optical Instrumentation Engineers (SPIE). doi:10.1117/12.2275959

Farago, T.; Mikulik, P.; Ershov, A.; Vogelgesang, M.; Hänschke, D.; Baumbach, T.

2017. Journal of synchrotron radiation, 24 (6), 1283–1295. doi:10.1107/S1600577517012255

Ametova, E.; Ferrucci, M.; Chilingaryan, S.; McCarthy, M.; Dewulf, W.

2016. 31st Annual Meeting of the American Society for Precision Engineering (ASPE), Portland, OR, USA; 23 - 28 October 2016, 287–292, ASPE

Vogelgesang, M.; Rota, L.; Perez, L. E. A.; Caselle, M.; Chilingaryan, S.; Kopmann, A.

2016. Developments in X-Ray Tomography X, San Diego, United States, 29 - 31 August, 2016, Art. Nr.: 996715, Society of Photo-optical Instrumentation Engineers (SPIE). doi:10.1117/12.2237611

Kopmann, A.; Chilingaryan, S.; Vogelgesang, M.; Dritschler, T.; Shkarin, A.; Shkarin, R.; Santos Rolo, T. dos; Farago, T.; Kamp, T. van de; Balzer, M.; Caselle, M.; Weber, M.; Baumbach, T.

2016. IEEE Nuclear Science Symposium (NSS) and Medical Imaging Conference (MIC), Strasbourg, F, October 29 - November 5, 2016

Vogelgesang, M.; Rota, L.; Ardila Perez, L. E.; Caselle, M.; Chilingaryan, S.; Kopmann, A.

2016. Developments in X-Ray Tomography X, San Diego, CA, August 29-31, 2016. Ed.: S.R. Stock, 996715/1–9, Society of Photo-optical Instrumentation Engineers (SPIE)

Vogelgesang, M.; Rota, L.; Ardila Perez, L. E.; Caselle, M.; Chilingaryan, S.; Kopmann, A.

2016. Developments in X-Ray Tomography X, San Diego, CA, August 29-31, 2016

Vogelgesang, M.; Farago, T.; Morgeneyer, T. F.; Helfen, L.; Dos Santos Rolo, T.; Myagotin, A.; Baumbach, T.

2016. Journal of synchrotron radiation, 23, 1254–1263. doi:10.1107/S1600577516010195

Chilingaryan, S.

2016. Topical Workshop on Parallel Computing for Data Acquisition and Online Monitoring, Karlsruhe, February 7-8, 2016

Kamp, T. Van de; Santos Rolo, T. dos; Farago, T.; Kopmann, A.; Vogelgesang, M.; Chilingaryan, S.; Ershov, A.; Baumbach, T.

2015. 12th International Conference on Synchrotron Radiation Instrumentation (SRI 2015), New York, N.Y., July 6-10, 2015

Chilingaryan, S.; Ametova, E.; Buldygin, R.; Shkarin, A.; Vogelgesang, M.

2015. YerPhI CRD Seminar, Yerevan Physics Institute, Yerevan, ARM, May 19, 2015

Stevanovic, U.; Caselle, M.; Cecilia, A.; Chilingaryan, S.; Farago, T.; Gasilov, S.; Herth, A.; Kopmann, A.; Vogelgesang, M.; Balzer, M.; Baumbach, T.; Weber, M.

2015. IEEE transactions on nuclear science, 62, 911–918. doi:10.1109/TNS.2015.2425911

Shkarin, R.; Ametova, E.; Chilingaryan, S.; Dritschler, T.; Kopmann, A.; Mirone, A.; Shkarin, A.; Vogelgesang, M.; Tsapko, S.

2015. Fundamenta informaticae, 141 (2-3), 245–258. doi:10.3233/FI-2015-1274

Vogelgesang, M.

2014. Karlsruher Institut für Technologie (KIT). doi:10.5445/IR/1000048019

Vogelgesang, M.

2015. Vortr.: Helmholtz-Zentrum Dresden-Rossendorf, 11.Mai 2015

Vogelgesang, M.

2015. PNI-HDRI Spring Meeting, DESY, Hamburg, April 13-14, 2015

Vondrous, A.; Jejkal, T.; Dapp, R.; Stotzka, R.; Mexner, W.; Ressmann, D.; Mauch, V.; Kopmann, A.; Vogelgesang, M.; Farago, T.; Kamp, T. van de

2015. 3rd ASTOR Working Day, Karlsruhe, March 19, 2015

Khokhriakov, I.; Lottermoser, L.; Gehrke, R.; Kracht, T.; Wintersberger, E.; Kopmann, A.; Vogelgesang, M.; Beckmann, F.

2014. Optics and Photonics 2014, San Diego, Calif., August 17-21, 2014

Kopmann, A.; Balzer, M.; Caselle, M.; Chilingaryan, S.; Dritschler, T.; Farago, T.; Herth, A.; Rota, L.; Stevanovic, U.; Vogelgesang, M.; Weber, M.

2014. Science 3D Workshop, Hamburg June 2-4, 2014

Khokhriakov, I.; Lottermoser, L.; Gehrke, R.; Kracht, T.; Wintersberger, E.; Kopmann, A.; Vogelgesang, M.; Beckmann, F.

2014. Developments in X-Ray Tomography IX : Proceedings of Optics and Photonics 2014, San Diego, CA, August 18-20, 2014. Ed.: S. R. Stock, Article no 921217, Society of Photo-optical Instrumentation Engineers (SPIE). doi:10.1117/12.2060975

Lytaev, P.; Hipp, A.; Lottermoser, L.; Herzen, J.; Greving, I.; Khokhriakov, I.; Meyer-Loges, S.; Plewka, J.; Burmester, J.; Caselle, M.; Vogelgesang, M.; Chilingaryan, S.; Kopmann, A.; Balzer, M.; Schreyer, A.; Beckmann, F.

2014. Optics and Photonics 2014, San Diego, Calif., August 17-21, 2014

Lytaev, P.; Hipp, A.; Lottermoser, L.; Herzen, J.; Greving, I.; Khokhriakov, I.; Meyer-Loges, S.; Plewka, J.; Burmester, J.; Caselle, M.; Vogelgesang, M.; Chilingaryan, S.; Kopmann, A.; Balzer, M.; Schreyer, A.; Beckmann, F.

2014. Developments in X-Ray Tomography IX : Proceedings of Optics and Photonics 2014, San Diego, CA, August 18-20, 2014. Ed.: S. R. Stock, Article no 921218, Society of Photo-optical Instrumentation Engineers (SPIE). doi:10.1117/12.2061389

Vogelgesang, M.

2014. Workshop on Fast Data Processing on GPUs, Dresden, May 15, 2014

Vogelgesang, M.

2014. Science 3D Workshop, Hamburg June 2-4, 2014

Vogelgesang, M.

2014. PaNdata ODI Open Workshop (POOW’14), Amsterdam, NL, September 25, 2014

Chilingaryan, S.; Balzer, M.; Caselle, M.; Santos Rolo, T. dos; Dritschler, T.; Farago, T.; Kopmann, A.; Stevanovic, U.; Kamp, T. van de; Vogelgesang, M.; Asadchikov, V.; Baumbach, T.; Myagotin, A.; Tsapko, S.; Weber, M.

2014. Deutsche Tagung für Forschung mit Synchrotronstrahlung, Neutronen und Ionenstrahlen an Großgeräten (SNI 2014), Bonn, 21.-23. September 2014

Vogelgesang, M.; Kopmann, A.; Farago, T.; Santos Rolo, T. dos; Baumbach, T.

2013. 14th International Conference on Accelerator and Large Experimental Physics Control Systems, San Francisco, Calif., October 6-11, 2013

Vogelgesang, M.; Kopmann, A.; Farago, T.; Santos Rolo, T. dos; Baumbach, T.

2013. 14th International Conference on Accelerator and Large Experimental Physics Control Systems, San Francisco, Calif., October 6-11, 2013 Proceedings Publ.Online Paper TUPPC044

Vogelgesang, M.

2014. KSETA Plenary Workshop, Bad Herrenalb, 24.-26.Februar 2014

Vogelgesang, M.

2013. HDRI/PanData Workshop, Hamburg, 4.-5.März 2013

Vogelgesang, M.; Farago, T.; Rolo, T.; Kopmann, A.; Mexner, W.; Baumbach, T.

2013. 27th Tango Collaboration Meeting, Barcelona, E, May 21-24, 2013

Chilingaryan, S.; Kopmann, A.; Myagotin, A.; Vogelgesang, M.

2011. Workshop on IT Research and Development at ANKA, Karlsruhe, July 19, 2011

Haas, D.; Mexner, W.; Spangenberg, T.; Cecilia, A.; Kopmann, A.; Balzer, M.; Vogelgesang, M.; Pasic, H.; Chilingaryan, S.

2012. 9th Internat.Workshop on Personal Computers and Particle Accelerator Controls (PCaPAC-2012), Kolkata, IND, December 4-7, 2012

Vogelgesang, M.; Chilingaryan, S.; Santos Rolo, T. dos; Kopmann, A.

2012. 14th Internat.Conf.on High Performance Computing and Communication (HPCC9) and 9th Internat.Conf.on Embedded Software and Systems (ICESS), Liverpool, GB, June 25-27, 2012

Vogelgesang, M.; Chilingaryan, S.; Santos Rolo, T. dos; Kopmann, A.

2012. 14th Internat.Conf.on High Performance Computing and Communication (HPCC9) and 9th Internat.Conf.on Embedded Software and Systems (ICESS), Liverpool, GB, June 25-27, 2012. Ed.: G. Min, 824–829, Institute of Electrical and Electronics Engineers (IEEE). doi:10.1109/HPCC.2012.116

Chilingaryan, S. A.; Caselle, M.; Kamp, T. van de; Kopmann, A.; Mirone, A.; Stevanovic, U.; Santos Rolo, T. dos; Vogelgesang, M.

2012. GPU Technology Conf.2012 (GTC), San Jose, Calif., May 14-17, 2012

Vogelgesang, M.; Chilingaryan, S.; Kopmann, A.

2011. Internat.Conf.for High Performance Computing, Networking, Storage and Analysis (SC’11), Seattle, Wash., November 12-18, 2011

Balzer, M.; Caselle, M.; Chilingaryan, S.; Herth, A.; Kopmann, A.; Stevanovic, U.; Vogelgesang, M.; Santos Rolo, T. dos

2012. Tagung der Studiengruppe für Elektronische Instrumentierung (SEI), Dresden-Rossendorf, 12.-14.März

Chilingaryan, S.; Vogelgesang, M.; Kopmann, A.

2011. Meeting of the High Data Rate Processing and Analysis Initiative (HDRI), Hamburg, May 16, 2011

Chilingaryan, S.

2011. Meeting on Tomographic Reconstruction Software, Grenoble, F, March 30 - April 1, 2011

Chilingaryan, S.

2009. Chen, L. [Hrsg.] Database Systems for Advanced Applications : DASFAA 2009 Internat.Workshops: BenchmarX, MCIS, WDPP, PPDA, MBC, PhD, Brisbane, AUS, April 20-23, 2009 Berlin [u.a.] : Springer, 2009 (Lecture Notes in Computer Science ; 5667)

Chilingaryan, S.

2009. Chen, L. [Hrsg.] Database Systems for Advanced Applications : DASFAA 2009 Internat.Workshops: BenchmarX, MCIS, WDPP, PPDA, MBC, PhD, Brisbane, AUS, April 20-23, 2009 Berlin [u.a.] : Springer, 2009 (Lecture Notes in Computer Science ; 5667), 21–34